Guide to Working With JSON in SQL 2016 Part 2 - Output and Format

/In part 2 of this series we are going to explore the process of getting data out of a SQL database and in to a usable JSON document. Like FOR XML in the past, Microsoft introduced the FOR JSON clause to format the result of a query as a JSON document. If you want to produce a flat document the FOR JSON clause works just fine for that, but most documents are not flat and will contain nested objects and arrays so we need to know how to deal with that. That will be the focus of this post.

Part of the trick to outputting a meaningful JSON document is understanding the limitations and behaviors of the FOR JSON clause and how to work around them. We will explain in the examples below.

First we need to create our tables that represent the relational data.

-- Create the relational tables CREATE TABLE #Products ( ProductNumber int ,ProductName varchar(25) ,ProductType varchar(20) ,Runtime smallint ); INSERT INTO #Products SELECT 45975, 'Spiderman', 'Movie', 110 INSERT INTO #Products SELECT 96587, 'Batman', 'Movie', 98 INSERT INTO #Products SELECT 25893, 'SuperMan', 'Movie', 102 -- For releases and assets, only include records for a single movie to keep it simple CREATE TABLE #Releases ( Country varchar(30) ,HomeReleaseDate datetime2(0) ,ProductNumber int ) INSERT INTO #Releases SELECT 'United States', DATEADD(dd, -329, GETDATE()), 96587; INSERT INTO #Releases SELECT 'France', DATEADD(dd, -256, GETDATE()), 96587; INSERT INTO #Releases SELECT 'Germany', DATEADD(dd, -215, GETDATE()), 96587; CREATE TABLE #Assets ( ProductNumber int ,Languages varchar(20) ,TitleName varchar(50) ) INSERT INTO #Assets SELECT 96587, 'English', 'Batman'; INSERT INTO #Assets SELECT 96587, 'French', 'LeBat'; INSERT INTO #Assets SELECT 96587, 'German', 'Bachman';

Now we need to create a table where we can combine our results. We need to produce multiple JSON outputs for a single record and then combine all of those outputs. We are going to create our combine table and then insert the first record. We will come back and update this record with supporting data for the final output.

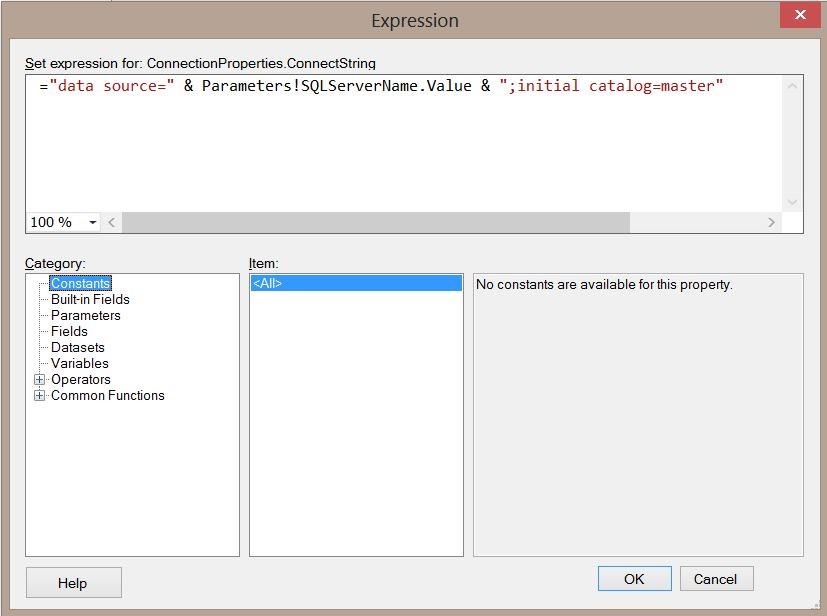

CREATE TABLE #Combine ( Product nvarchar(max) ,Releases nvarchar(max) ,Assets nvarchar(max) ) INSERT INTO #Combine (Product) VALUES (( SELECT ProductNumber ,ProductName ,ProductType ,Runtime FROM #Products WHERE ProductNumber = 96587 FOR JSON PATH, WITHOUT_ARRAY_WRAPPER))

The product field in the #Combine table represents a JSON document for the product 96587, Batman. The releases field represents an array of embedded objects that is part of the product document, the same with assets. We are producing three different related JSON results and then combining them in to one. The JSON PATH clause by itself cannot output embedded documents so we have to do this manually (well actually it can, kind of, if you use ROOT, but you don't have much control of the output and when dealing with multiple table joins, it does what it wants, which is not likely what you want). Also to note, in the FOR JSON PATH above we use the option WITHOUT_ARRAY_WRAPPER. This eliminates the square brackets from the JSON output. We only want to exclude this for the parent document. Now we are going to update the other fields and take a look at what we have so far.

UPDATE #Combine

SET Releases = (

SELECT

ProductNumber

,Country

,HomeReleaseDate

FROM #Releases

FOR JSON PATH, ROOT('Releases'))

UPDATE #Combine

SET Assets = (

SELECT

Languages

,TitleName

FROM #Assets

FOR JSON AUTO, ROOT('Assets') )

SELECT * FROM #CombineSo we effectively have 3 JSON documents we need to combine in to a single document. To do this we use the following.

SELECT STUFF(Product,LEN(Product),1, ',') + STUFF(STUFF(Releases, 1, 1, ''), LEN(Releases) - 1, 1, ',') + STUFF(Assets, 1, 1, '') FROM #Combine

The query above doesn't do anything special except combine the documents. Copy the result and paste it in to a JSON validator to see the end result.

It's not pretty but it works. Would I use it in production? Probably not. Keep in mind this is only version 1.0, hopefully we will see improvements to this in future releases. We can hope that Microsoft follows in the footsteps of Postgres, with a true native data type. Or of course you could do all of this in an application just as easily.